Human-centered neural reasoning for subjective content processing: Hate speech, emotions, and humor

Abstract

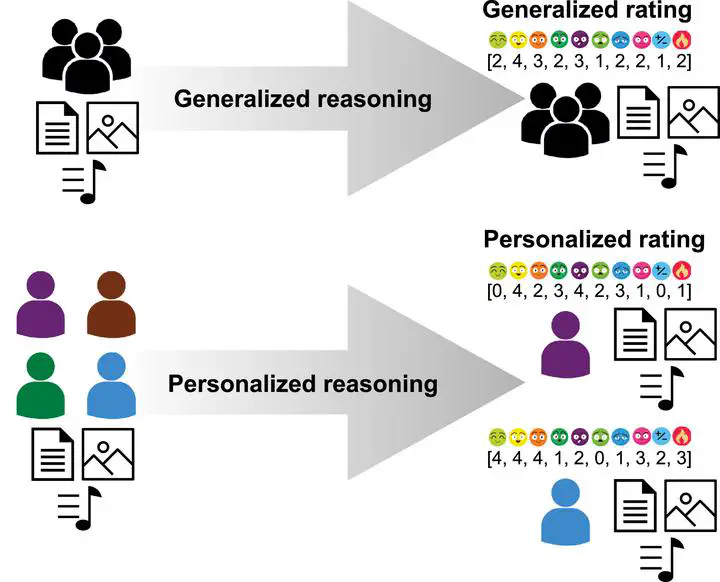

Some content processing tasks, particularly in natural language processing (NLP), are subjective by nature: detecting hate or offensive speech, emotional text, or humor. Each person may perceive the same content differently. Existing reasoning methods commonly rely on agreed output values that are the same for all recipients. We propose a fundamentally different approach: personalized solutions applicable to any subjective NLP task. Our five new deep learning models take into account not only the textual content but also the opinions and beliefs of a given person. They differ in how they learn Human Bias (HuBi) and how they fuse it with the content (text) representation. The experiments were carried out on 14 tasks related to offensive, emotional, and humorous texts. Our personalized HuBi methods radically outperformed the generalized ones on all NLP problems. Personalization also has a greater impact on reasoning quality than commonly explored pre-trained and fine-tuned language models. We discovered a high correlation between the human bias calculated using our dedicated formula and the bias learned by the model. Multi-task solutions achieved better outcomes than single-task architectures. Human and word embeddings also provided additional insights.